Maximizing Your Output: How to Make the Best Use of Proxies

In today's digital landscape, optimizing your output in data extraction and online automation is essential. One of the most effective ways to improve your efficiency is to use proxies. A proxy acts as an intermediary between your device and the internet: your requests go to the proxy, which forwards them to the target site, so the site sees the proxy's IP address rather than yours. Whether you're scraping data, running bots, or accessing region-locked content, the right proxy tools can make all the difference.

This article covers the tools and strategies for using proxies effectively, from proxy scrapers that collect lists of usable proxies to proxy checkers that verify their reliability and speed. We'll also explore the main types of proxies, such as HTTP and SOCKS, and weigh the advantages of dedicated versus shared proxies. By understanding these elements and using the right tools, you can considerably improve your web scraping efforts and streamline your workflow.

Understanding Proxy Servers and Their Types

Proxies act as go-betweens for a user and the internet, relaying requests and responses while concealing the user's real IP address. They serve several purposes, such as improving anonymity, adding a layer of security, and enabling web scraping by getting around geo-blocks and other access restrictions. Understanding how proxy servers work is important for using them effectively in tasks like data collection and automation.

There are various types of proxy servers, with HTTP and SOCKS being the most commonly used. HTTP proxies are designed specifically for web traffic, which makes them well suited to standard browsing and web scraping. SOCKS proxies, by contrast, operate at a lower level and can relay other kinds of traffic, such as email and FTP. They come in two main versions, SOCKS4 and SOCKS5. The key difference is that SOCKS5 adds support for authentication, UDP traffic, and IPv6, enabling more flexible and secure connections.
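To make the protocol distinction concrete, the sketch below parses proxy addresses written as URLs (for example `socks5://user:pass@host:1080`). Writing proxies this way is a convention many HTTP clients and proxy tools follow, but treat it as an assumption rather than a universal format. `ProxySpec` and `parse_proxy` are hypothetical names for illustration. The parser uses only Python's standard library and rejects a password on a SOCKS4 proxy, since SOCKS4 has no password authentication.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit

# Protocols discussed above (illustrative set; extend it if your tools use others).
SUPPORTED_SCHEMES = {"http", "socks4", "socks5"}


@dataclass(frozen=True)
class ProxySpec:
    scheme: str
    host: str
    port: int
    username: Optional[str] = None
    password: Optional[str] = None


def parse_proxy(url: str) -> ProxySpec:
    """Parse a proxy URL such as "socks5://user:pass@10.0.0.1:1080"."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme!r}")
    port = parts.port  # raises ValueError if the port is not a valid number
    if not parts.hostname or port is None:
        raise ValueError(f"proxy URL needs a host and a port: {url!r}")
    if scheme == "socks4" and parts.password:
        # SOCKS4 carries at most a user ID; it has no password authentication.
        raise ValueError("SOCKS4 does not support password authentication")
    return ProxySpec(scheme, parts.hostname, port, parts.username, parts.password)
```

For example, `parse_proxy("http://127.0.0.1:8080")` yields an HTTP proxy with no credentials, while a SOCKS5 URL can carry a username and password.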

When selecting a proxy, weigh both its protocol and whether it is private or public, based on your requirements. Private proxies are dedicated to a single user, which generally means better performance and privacy. Public proxies, in contrast, are shared by many users, which makes them less dependable but often available for free. Understanding the differences and uses of each type helps users make informed decisions for web scraping and automation.

Tools for Scraping Proxies

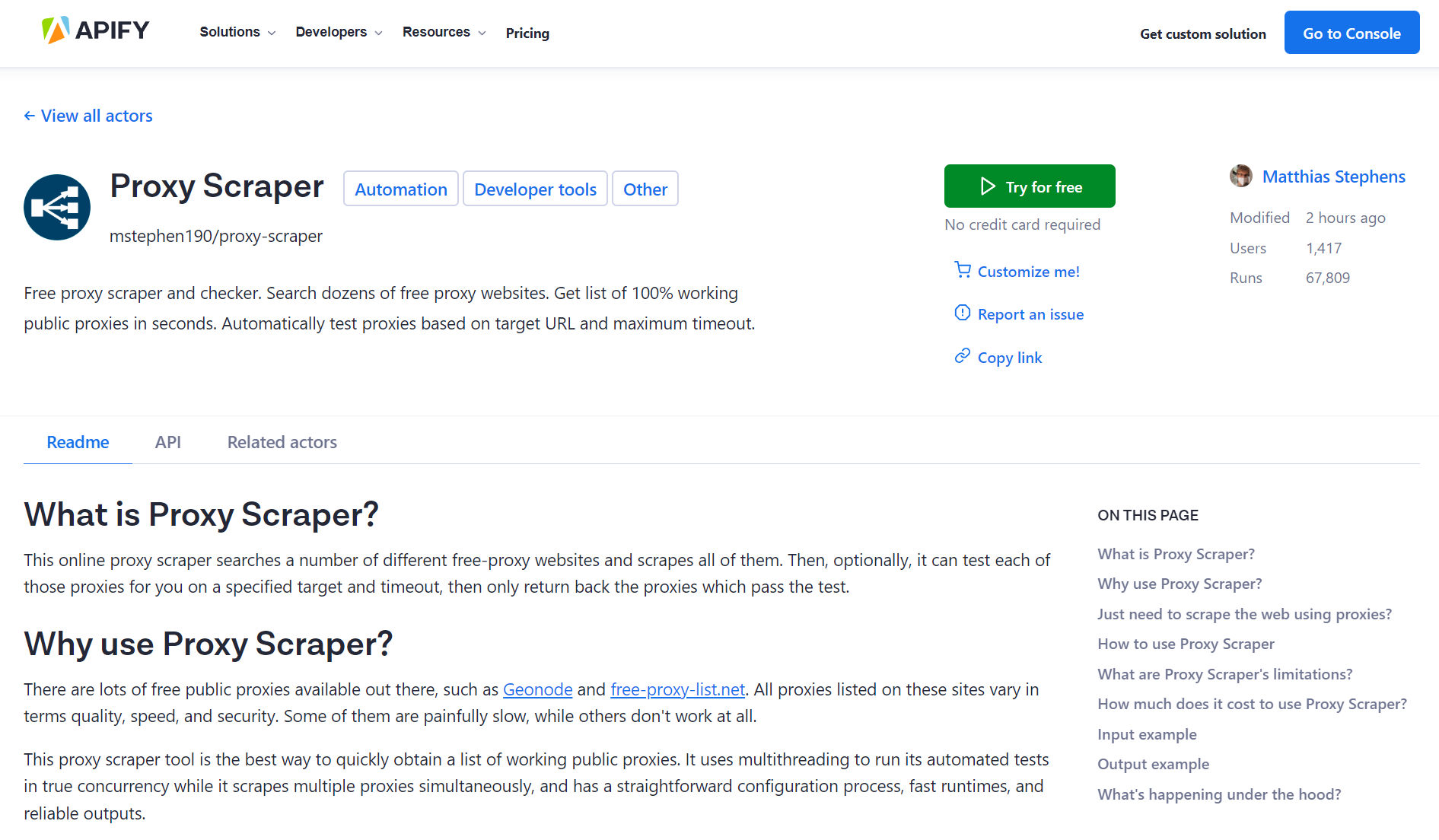

When it comes to collecting proxies, having the right tools is vital for productivity. A reliable proxy scraper is key to gathering a varied list of proxies for applications such as web data extraction or automation. Many people turn to free proxy scrapers, but it's important to evaluate their speed and reliability to make sure they meet your needs. A fast proxy scraper can considerably shorten the process of acquiring proxies.
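A minimal sketch of the extraction step of a proxy scraper, using only Python's standard library. It assumes the source page lists proxies as plain `IP:port` text; many sites instead put the IP and port in separate table cells, which would need an HTML parser. `fetch_text` and `extract_proxies` are hypothetical helpers, and the source URL you pass in is up to you.

```python
import re
import urllib.request
from typing import List

# Matches "IPv4:port" pairs such as 203.0.113.5:8080 anywhere in the text.
PROXY_PATTERN = re.compile(r"\b((?:\d{1,3}\.){3}\d{1,3}):(\d{1,5})\b")


def fetch_text(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it as text."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def extract_proxies(text: str) -> List[str]:
    """Return unique, plausibly valid "ip:port" strings in order of first appearance."""
    seen = set()
    found: List[str] = []
    for ip, port in PROXY_PATTERN.findall(text):
        if any(int(octet) > 255 for octet in ip.split(".")):
            continue  # not a real IPv4 address
        if not 1 <= int(port) <= 65535:
            continue  # outside the valid TCP port range
        proxy = f"{ip}:{port}"
        if proxy not in seen:
            seen.add(proxy)
            found.append(proxy)
    return found
```

A typical call would be `extract_proxies(fetch_text(source_url))`, where `source_url` is whatever list page you have chosen; the result still needs to be checked before use.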

Once you've compiled a list of candidate proxies, a capable proxy checker is essential. The best proxy checkers not only confirm that each proxy works but also measure its speed and anonymity level. A validation tool filters out unusable proxies, letting you concentrate on the reliable ones. Many users prefer tools that combine scraping and checking, providing an all-in-one solution for managing proxies.

For those interested in advanced capabilities, dedicated tools such as ProxyStorm can provide additional features for identifying high-quality proxies. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies will also help you pick the best proxy providers for web scraping and other tasks. With a solid grasp of the most effective tools for scraping free proxies and checking their reliability, you'll be well equipped to use proxies effectively.

Inspecting and Assessing Proxies

When using proxies, verifying that they work is vital for good performance. A dependable proxy checker tells you whether your proxies are operating as intended by quickly scanning your list and identifying which proxies respond. Fast proxy scrapers and checkers can greatly reduce the time this takes, letting you concentrate on data extraction rather than troubleshooting non-functional proxies.
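A basic concurrent checker could look like the following sketch. It assumes plain HTTP proxies given as `host:port` (Python's `urllib` does not speak SOCKS) and uses `http://example.com/` as a placeholder test URL; `is_proxy_working` and `filter_working` are illustrative names. A thread pool checks many proxies at once, which is where most of the time savings come from, because each check spends most of its time waiting on the network.

```python
import http.client
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def is_proxy_working(proxy: str, test_url: str = "http://example.com/",
                     timeout: float = 5.0) -> bool:
    """Return True if a request for `test_url` routed through `proxy` ("host:port") succeeds."""
    handler = urllib.request.ProxyHandler({
        "http": f"http://{proxy}",
        "https": f"http://{proxy}",
    })
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as resp:
            return 200 <= resp.getcode() < 300
    except (OSError, ValueError, http.client.HTTPException):
        # Refused connections, timeouts, HTTP errors (URLError and HTTPError are
        # OSError subclasses) and malformed responses all count as failures.
        return False


def filter_working(proxies: Iterable[str],
                   check: Callable[[str], bool] = is_proxy_working,
                   max_workers: int = 20) -> List[str]:
    """Check proxies concurrently and keep the ones that pass, preserving order."""
    candidates = list(proxies)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(check, candidates))
    return [proxy for proxy, ok in zip(candidates, results) if ok]
```

Passing the check function as a parameter keeps the concurrency logic separate from how "working" is defined, so a stricter check (for example one that also tests anonymity) can be dropped in later.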

Beyond basic functionality checks, measuring proxy speed is another important aspect of proxy management. Tools like FastProxy can show how quickly a proxy responds to requests. This information matters especially for web scraping, where response time directly affects how fast you can extract data. For tasks that demand high anonymity or performance, testing the speed of each proxy will help you choose the most suitable ones from your pool.
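Speed can be measured by timing a full request through each proxy. The sketch below, again assuming HTTP proxies and a placeholder test URL, records the elapsed time with `time.perf_counter()` and keeps only proxies under a latency budget. `measure_latency` and `rank_by_latency` are hypothetical names, and the 2-second default is an arbitrary assumption, not a recommended value.

```python
import http.client
import time
import urllib.request
from typing import Dict, List, Optional


def measure_latency(proxy: str, test_url: str = "http://example.com/",
                    timeout: float = 5.0) -> Optional[float]:
    """Seconds taken to fetch `test_url` through an HTTP proxy, or None on failure."""
    handler = urllib.request.ProxyHandler({"http": f"http://{proxy}", "https": f"http://{proxy}"})
    opener = urllib.request.build_opener(handler)
    start = time.perf_counter()
    try:
        with opener.open(test_url, timeout=timeout) as resp:
            resp.read()  # include the body download in the timing
    except (OSError, ValueError, http.client.HTTPException):
        return None
    return time.perf_counter() - start


def rank_by_latency(latencies: Dict[str, Optional[float]],
                    max_latency: float = 2.0) -> List[str]:
    """Fastest-first list of proxies that responded within `max_latency` seconds."""
    usable = {p: t for p, t in latencies.items() if t is not None and t <= max_latency}
    return sorted(usable, key=lambda p: usable[p])
```

A single timing is noisy, so in practice you might take the median of several measurements per proxy before ranking.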

Another key factor in managing proxies is security and anonymity. Testing anonymity levels is essential to avoid detection when scraping websites. A proxy checker can help determine whether a proxy is transparent (it passes your real IP address to the target), anonymous (it hides your IP but reveals that a proxy is in use), or elite (it hides both). Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies also helps in selecting the right one for your purposes. By combining functionality checks, speed tests, and anonymity tests, you can make sure your proxy setup is robust and fit for your web scraping projects.
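Anonymity checks usually work by sending a request through the proxy to an endpoint that echoes back the headers it received (a self-hosted "proxy judge" script, or a service such as `https://httpbin.org/headers`), then inspecting those headers. The classifier below is a heuristic sketch of that last step. It assumes you already know your own public IP, and its list of proxy-revealing headers is a common but non-exhaustive assumption; `classify_anonymity` is an illustrative name.

```python
from typing import Mapping

# Headers that commonly reveal a proxy (heuristic, not an exhaustive list).
PROXY_REVEALING_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip",
                           "proxy-connection", "client-ip")


def classify_anonymity(seen_headers: Mapping[str, str], real_ip: str) -> str:
    """Classify a proxy from the request headers the target server received.

    `seen_headers` is what the echo endpoint reported; `real_ip` is your own
    public IP address, measured without the proxy.
    """
    lowered = {name.lower(): value for name, value in seen_headers.items()}
    # Simple substring test; it can misfire if your IP is a prefix of another
    # address that appears in a header.
    if any(real_ip in value for value in lowered.values()):
        return "transparent"  # your real IP leaked through
    if any(name in lowered for name in PROXY_REVEALING_HEADERS):
        return "anonymous"    # IP hidden, but the proxy announces itself
    return "elite"            # no sign of your IP or of a proxy
```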

Using Proxies for Web Scraping

Web scraping is the process of extracting content from websites, but many sites have measures to restrict automated requests. Proxies can help you get around these restrictions so that your data extraction tasks run smoothly. A proxy server acts as an intermediary between your scraper and the target site, letting you send requests from different IP addresses. Spreading requests across IPs reduces the risk of being blocked or throttled, so you can collect more data without interruption.
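Spreading requests across IP addresses is typically done with a rotating pool. The sketch below hands out proxies in round-robin order and retries a failed request through the next proxy. `ProxyRotator` and `fetch_via_urllib` are hypothetical names; the fetch function is passed in so you can swap in any HTTP client, and the default shown assumes HTTP proxies.

```python
import http.client
import itertools
import threading
import urllib.request
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def fetch_via_urllib(url: str, proxy: str, timeout: float = 10.0) -> bytes:
    """Fetch `url` through an HTTP proxy given as "host:port"."""
    handler = urllib.request.ProxyHandler({"http": f"http://{proxy}", "https": f"http://{proxy}"})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as resp:
        return resp.read()


class ProxyRotator:
    """Hands out proxies in round-robin order so requests spread across IPs."""

    def __init__(self, proxies: Iterable[str]) -> None:
        self._proxies = list(proxies)
        if not self._proxies:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(self._proxies)
        self._lock = threading.Lock()  # serialize access to the shared iterator

    def next_proxy(self) -> str:
        with self._lock:
            return next(self._cycle)

    def fetch(self, url: str,
              fetch_via: Callable[[str, str], T] = fetch_via_urllib,
              max_attempts: int = 3) -> T:
        """Try up to `max_attempts` proxies in turn; raise if all of them fail."""
        last_error: Optional[BaseException] = None
        for _ in range(max_attempts):
            proxy = self.next_proxy()
            try:
                return fetch_via(url, proxy)
            except (OSError, http.client.HTTPException) as exc:
                last_error = exc  # network or proxy failure: move on to the next proxy
        raise RuntimeError(f"all {max_attempts} attempts failed for {url}") from last_error
```

Round-robin is the simplest rotation policy; random choice or weighting by measured latency are common alternatives.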

When selecting proxies for scraping, you will need to choose between private and public proxies. Private proxies offer better performance and anonymity, making them well suited to large-scale scraping projects. Public proxies, while often free, can be unreliable and slow, which can hamper your scraping. Checking proxy speed and anonymity is also important to ensure you get high-quality, fast proxies that meet your data extraction needs.

The right software can considerably improve your web scraping experience. Many proxy scrapers and checkers are available, each suited to different needs, and the fastest of them help you find and verify proxies efficiently. By scripting proxy scraping in Python or using reliable online list generators, you can automate the task of acquiring high-quality proxies for your data extraction.

Best Practices for Proxy Efficiency

To maximize efficiency with proxies, it is essential to understand the types available and pick the right one for your needs. HTTP proxies are well suited to web scraping, as they handle regular web traffic efficiently. SOCKS proxies, especially SOCKS5, offer more versatility and can carry various kinds of traffic, including both TCP and UDP. Evaluate the needs of your task and choose between dedicated and public proxies based on your security and performance requirements.

Regularly monitoring and verifying your proxies is crucial to maintaining maximum output. Use a trustworthy proxy checker to test the speed and anonymity of your proxies; this helps you spot dead or slow proxies that could hinder your scraping. Tools like ProxyStorm can streamline this task with in-depth validation features. Keeping your proxy list current saves time and effort and lets you concentrate on your core goals.
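Regular monitoring can be automated with a small bookkeeping class. In this sketch (the `ProxyPool` name and the three-strikes default are assumptions), each check result is recorded, a proxy is evicted after several consecutive failures, and `revalidate` re-tests only proxies that have not been checked within a given interval. The `check` argument can be any function that returns `True` for a working proxy, such as a simple HTTP request through it.

```python
import time
from typing import Callable, Dict, Iterable, List


class ProxyPool:
    """Tracks proxy health and evicts proxies after repeated consecutive failures."""

    def __init__(self, proxies: Iterable[str], max_failures: int = 3,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self._clock = clock
        self._failures: Dict[str, int] = {p: 0 for p in proxies}  # consecutive failures
        self._last_checked: Dict[str, float] = {}

    @property
    def active(self) -> List[str]:
        return list(self._failures)

    def record(self, proxy: str, ok: bool) -> None:
        if proxy not in self._failures:
            return  # unknown or already evicted
        self._last_checked[proxy] = self._clock()
        if ok:
            self._failures[proxy] = 0
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self.max_failures:
            del self._failures[proxy]
            del self._last_checked[proxy]

    def due_for_check(self, interval: float) -> List[str]:
        """Proxies never checked, or last checked at least `interval` seconds ago."""
        now = self._clock()
        return [p for p in self._failures
                if now - self._last_checked.get(p, float("-inf")) >= interval]

    def revalidate(self, check: Callable[[str], bool], interval: float = 300.0) -> None:
        for proxy in self.due_for_check(interval):
            self.record(proxy, check(proxy))
```

The clock is injectable so the class can be tested without waiting; in production the default `time.monotonic` is used.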

Finally, take advantage of automation to improve your proxy management. Adding a proxy scraper to your workflow speeds up the acquisition of fresh proxy lists, and pairing it with a proxy checker lets you automate finding and testing proxies so that you are always working with good ones. This not only boosts your effectiveness but also reduces the manual effort involved in managing proxies.

Free vs Paid Proxy Options

When deciding between free and paid proxies, it's important to understand the trade-offs. Free proxies attract users because they cost nothing, which makes them appealing for light browsing or small projects. However, they carry significant drawbacks, including unreliable connections, slower speeds, and potential security risks, since they are typically shared by many users. Free proxies may also not provide real anonymity, making them unsuitable for critical tasks like web scraping or automation.

Paid proxies, on the other hand, typically offer better support and reliability. They are often more secure, and dedicated servers provide faster speeds and better uptime. Paid options also usually come with features such as proxy checking tools and responsive customer support, making them a good fit for businesses that depend on consistent performance for data extraction and web scraping. Paid plans also often include access to several proxy types, such as HTTP, SOCKS4, and SOCKS5, letting users pick the right protocol for their needs.

Ultimately, the choice between free and paid proxies depends on the user's requirements. For intensive web scraping, automation, or tasks that require reliable anonymity, investing in high-quality paid proxies is the better choice. If your needs are limited or exploratory, free proxies may be sufficient. Assessing your specific use case and weighing the advantages of each option will help you make the right choice.

Automation and Proxy Usage

Proxies play a key role in automation, enabling tasks such as web scraping, data collection, and SEO analysis without being blocked or rate-limited. By building a reliable proxy scraper into your routine, you can gather large amounts of data while reducing the chance of detection by online platforms. Automation lets you run multiple jobs simultaneously, and high-quality proxies keep those jobs running smoothly and efficiently.
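When many automated jobs share a proxy pool, it can help to cap how often each individual IP hits a site. The sketch below enforces a minimum interval between requests through the same proxy and is safe to call from multiple threads. The `PerProxyRateLimiter` name and the idea of a fixed per-proxy interval are illustrative assumptions, since the right pacing depends on the target site.

```python
import threading
import time
from typing import Callable, Dict


class PerProxyRateLimiter:
    """Enforces a minimum interval between consecutive uses of the same proxy."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._next_allowed: Dict[str, float] = {}
        self._lock = threading.Lock()

    def wait(self, proxy: str) -> float:
        """Block until `proxy` may be used again; return how many seconds were waited."""
        with self._lock:
            now = self._clock()
            start = max(now, self._next_allowed.get(proxy, now))
            # Reserve this slot before releasing the lock so concurrent callers
            # using the same proxy line up behind it instead of colliding.
            self._next_allowed[proxy] = start + self.min_interval
        delay = start - now
        if delay > 0:
            self._sleep(delay)  # sleep outside the lock so other proxies are not held up
        return delay
```

Each worker would call `limiter.wait(proxy)` just before sending a request, for example inside a function submitted to a `concurrent.futures.ThreadPoolExecutor`.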

When automating processes, understanding the distinction between private and public proxies is important. Private (dedicated) proxies offer better anonymity and stability, making them ideal for automated tasks that require reliable performance. Public proxies, while more widely available and often free, can be unpredictable in speed and reliability. Choosing the right type of proxy for the nature of your automation is essential for efficiency.

Finally, advanced proxy checking tools strengthen your automation strategy by ensuring that only working, fast proxies are used. Fast proxy scrapers and checkers can verify the speed and anonymity of proxies in real time, helping you maintain a high level of performance in automated tasks. By carefully selecting and regularly re-testing your proxy list, you can further optimize your automation for better data extraction results.